Andre Ye

I am an honors, Phi Beta Kappa, and Mary Gates scholar at the University of Washington studying philosophy and computer science, minoring in mathematics and history.

My current research interests are twofold: (i) how can we design non-human intelligences to aid human philosophical practice and address metaphilosophical problems? and (ii) how can philosophical concerns and insights help us build better AI and human-AI interactions?

My general intellectual interests include phenomenology, philosophy of science, human-computer interaction, and computer vision.

Please see my research agenda and CV for more details.

I have written a few essays in philosophy, two books on deep learning, a little bit of fiction, and many data science articles.

My email is andreye [at] uw [dot] edu.

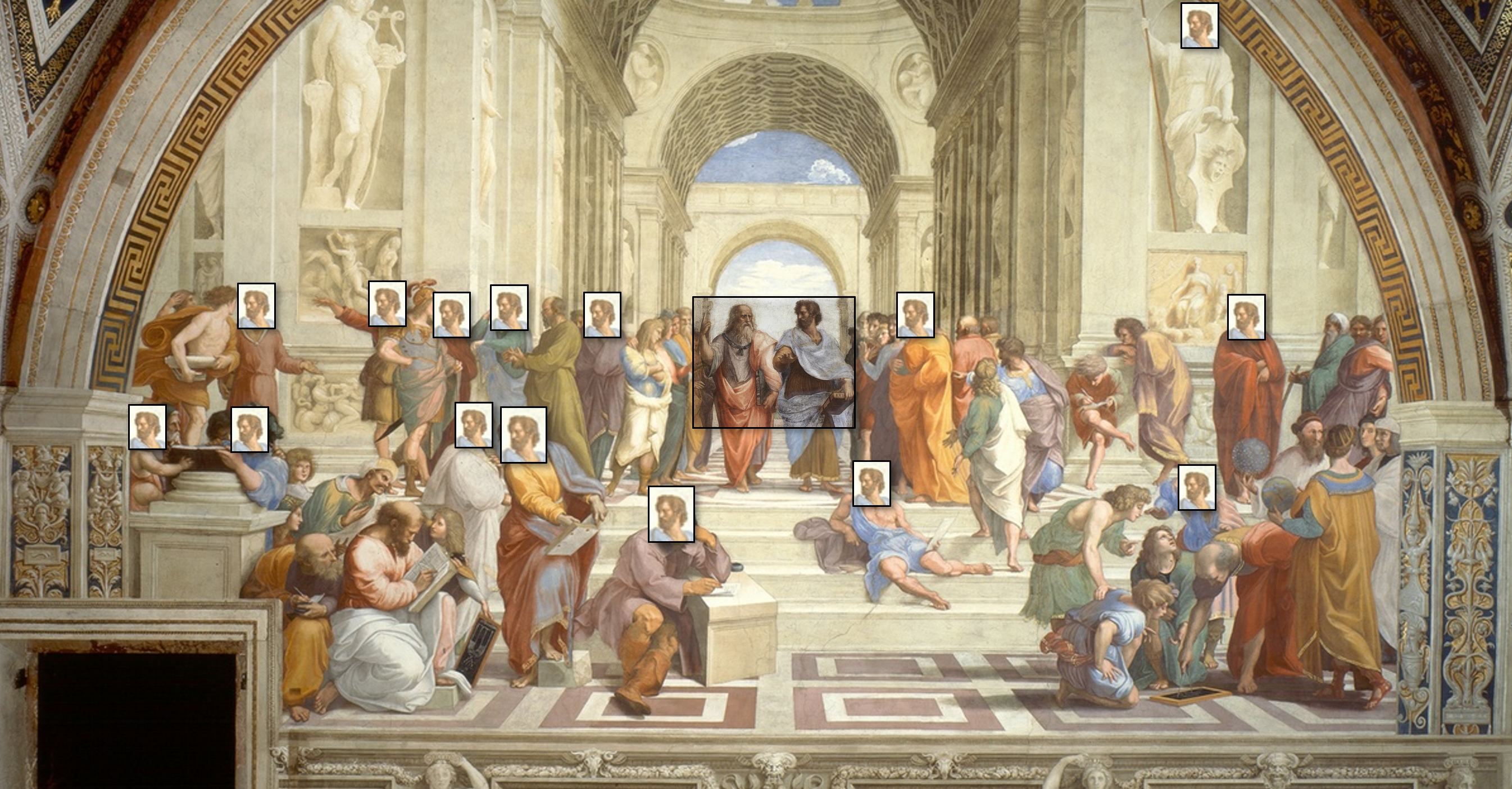

Composite image of The Disintegration of the Persistence of Memory (Dalí), Edmond de Belamy (GAN), Guernica (Picasso), still from Battleship Potemkin (Eisenstein), Relativity (Escher).

news

| Date | News |
|---|---|
| Jun 28, 2024 | My work has been covered in an Allen School news post! |
| May 14, 2024 | I am honored to have been named the UW Philosophy Department’s 2023-2024 Outstanding Undergraduate Scholar. |
| Apr 7, 2024 | I presented my paper “And then the Hammer Broke: Reflections on Machine Ethics from Feminist Philosophy of Science” at the Pacific University Philosophy Conference in Forest Grove, Oregon! Read the paper on arXiv. |
| Dec 22, 2023 | I am a Phi Beta Kappa scholar, sponsored by the Philosophy Department. |
| Dec 20, 2023 | I am a finalist for the CRA Outstanding Undergrad Research Award! |